Reference

To cite Little AI, please use the following reference:

Georgeon O. (2017). Little AI: Playing a constructivist robot. SoftwareX 6: 161-164.

Science

Current research in Artificial Intelligence (AI) faces many unsolved challenges on the way to creating an artificial system capable of exhibiting cognitive behaviors. But what exactly do we mean by cognitive behaviors? Clearly, they involve more than just solving predefined problems or maximizing a numerical value.

When you play Little AI, you generate behaviors that require different kinds of cognitive skills. Creating an AI involves implementing those skills in an artificial system. Little AI makes you exercise those skills so that you better understand how challenging it is to replicate them in an artificial system.

Below is an introductory list of the cognitive skills and features that Little AI makes you exercise and that we are trying to implement in an artificial system.

Desiderata for a developmental artificial intelligence

David Vernon aptly summarized the challenges faced by developmental AI in a talk he gave at Fierces on BICA. He listed ten desiderata for a developmental AI:

- Rich sensorimotor embodiment

- Value system, drives, motives

- Learn sensorimotor contingencies

- Perceptual categorization

- Spatial and selective attention

- Prospective actions

- Memory

- Internal simulation

- Learning

- Constitutive autonomy

In particular, the quotations below illustrate two important aspects of these desiderata:

- Cognitive systems must master the laws of their sensorimotor contingencies.

- Higher-level intelligence (reasoning, language) builds on lower-level capabilities of sensorimotor inference.

Mastering the laws of sensorimotor contingencies

"Imagine a team of engineers operating a remote-controlled underwater vessel exploring the remains of the Titanic, and imagine a villainous aquatic monster that has interfered with the control cable by mixing up the connections to and from the underwater cameras, sonar equipment, robot arms, actuators, and sensors. What appears on the many screens, lights, and dials, no longer makes any sense, and the actuators no longer have their usual functions. What can the engineers do to save the situation? By observing the structure of the changes on the control panel that occur when they press various buttons and levers, the engineers should be able to deduce which buttons control which kind of motion of the vehicle, and which lights correspond to information deriving from the sensors mounted outside the vessel, which indicators correspond to sensors on the vessel's tentacles, and so on." (O'Regan & Noë 2001, p. 940. A sensorimotor account of vision and visual consciousness)

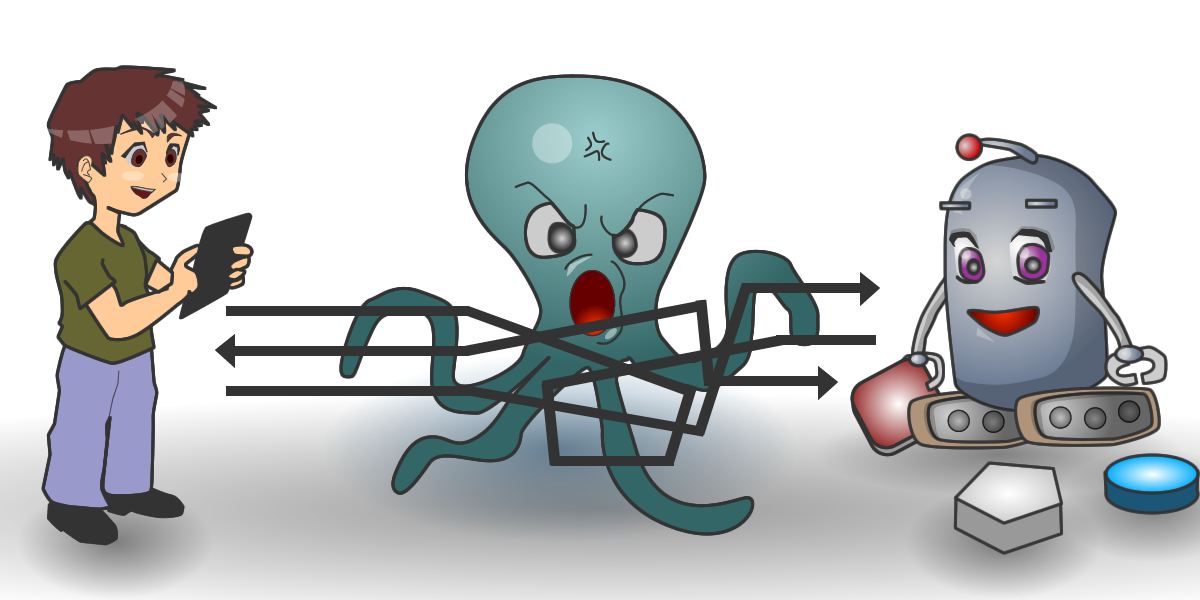

Figure 1 illustrates O'Regan & Noë's idea, with the underwater vessel replaced by Little AI. The player must observe how the control panel changes when they press the commands in order to deduce which buttons control which kind of motion of the robot.
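To make the engineers' deduction concrete, here is a minimal sketch, assuming a hypothetical scrambled panel: the button names, the effect labels (`EFFECTS`), the noise level and the helpers `make_scrambled_panel`, `press` and `learn_contingencies` are all invented for illustration and are not taken from Little AI or from the papers cited in this document. The learner presses each button repeatedly, counts which effect follows, and keeps the most frequent effect as that button's function.

```python
import random
from collections import Counter, defaultdict

# Hypothetical effects that the scrambled cable may connect to the buttons.
EFFECTS = ["move forward", "turn left", "turn right", "feel front"]


def make_scrambled_panel(seed):
    """Hidden environment: a random button -> effect wiring unknown to the learner."""
    rng = random.Random(seed)
    buttons = [f"button {i}" for i in range(len(EFFECTS))]
    wiring = EFFECTS[:]
    rng.shuffle(wiring)
    return dict(zip(buttons, wiring))


def press(panel, button, rng, noise=0.2):
    """Press a button; with probability `noise`, a random effect is observed instead."""
    if rng.random() < noise:
        return rng.choice(EFFECTS)
    return panel[button]


def learn_contingencies(buttons, act, trials_per_button=50):
    """Press each button repeatedly and keep its most frequently observed effect."""
    counts = defaultdict(Counter)
    for button in buttons:
        for _ in range(trials_per_button):
            counts[button][act(button)] += 1
    return {b: c.most_common(1)[0][0] for b, c in counts.items()}


panel = make_scrambled_panel(seed=42)
rng = random.Random(0)
# The learner only sees button names and the effects that its presses produce.
learned = learn_contingencies(list(panel), lambda b: press(panel, b, rng))
print(learned == panel)
```

This sketch is deliberately simplistic: observations arrive as ready-made labels, which is exactly the kind of presupposed ontological data that some of the works cited in this document aim to do without.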

Reason is evolutionary

"Reason is evolutionary, in that abstract reason builds on and makes use of forms of perceptual and motor inference present in "lower" animals. The result is a Darwinism of reason, a rational Darwinism: Reason, even in its most abstract form, makes use of, rather than transcends, our animal nature. The discovery that reason is evolutionary utterly changes our relation to other animals and changes our conception of human beings as uniquely rational. Reason is thus not an essence that separates us from other animals; rather, it places us on a continuum with them." (Anderson 2003, p. 106. Embodied cognition: A field guide, citing Lakoff and Johnson.)

How to implement agents that can play Little AI

General approach

Georgeon O., Mille A., Gay S. (2016). Intelligence artificielle sans données ontologiques sur une réalité présupposée [Artificial intelligence without using ontological data about a presupposed reality]. Intellectica 65: 143-168. hal-01379575

Georgeon O. & Cordier A. (2014). Inverting the interaction cycle to model embodied agents. Procedia Computer Science 41: 243-248. hal-01131263

Georgeon O., Marshall J., & Manzotti R. (2013). ECA: An enactivist cognitive architecture based on sensorimotor modeling. Biologically Inspired Cognitive Architectures 6: 46-57. hal-01339190

Georgeon O., Marshall J. & Gay S. (2012). Interactional motivation in artificial systems: Between extrinsic and intrinsic motivation. Second International Conference on Development and Learning and on Epigenetic Robotics (ICDL-EPIROB 2012), pp. 1-2. hal-01353128

Levels 0 to 4

Georgeon O., Casado R., & Matignon L. (2015). Modeling biological agents beyond the reinforcement-learning paradigm. Procedia Computer Science 71: 17-22. hal-01251602

Levels 5 to 10

Georgeon O. & Boltuc P. (2016). Circular Constitution of Observation in the Absence of Ontological Data. Constructivist Foundations 12(1): 17–19. hal-01399830

Georgeon O., Bernard F., & Cordier A. (2015). Constructing phenomenal knowledge in an unknown noumenal Reality. Procedia Computer Science 71: 11-16. hal-01231511

Georgeon O. & Hassas S. (2013). Single Agents Can Be Constructivist too. Constructivist Foundations 9(1): 40-42. hal-01339271

Levels 11 to 17

Georgeon O. & Marshall J. (2013). Demonstrating sense-making emergence in artificial agents: A method and an example. International Journal of Machine Consciousness 5(2): 131-144. hal-01339177

Georgeon O., Wolf C., & Gay S. (2013). An Enactive Approach to Autonomous Agent and Robot Learning. Third Joint International Conference on Development and Learning and on Epigenetic Robotics, IEEE ed. Osaka, Japan, pp. 1-6. hal-01339220

Georgeon O. & Ritter F. (2012). An intrinsically-motivated schema mechanism to model and simulate emergent cognition. Cognitive Systems Research 15-16: 73-92. hal-01353099

Learn more

Learn more about developmental artificial intelligence and how to program agents that can play Little AI in our free Massive Open Online Course (MOOC).